I’ve spent the last six months heads-down building a new machine learning tool called Weights and Biases with my longtime cofounder Chris Van Pelt, my new cofounder and friend Shawn Lewis, and brave early users at OpenAI, Toyota Research, Uber and others. Now that it’s public, I wanted to talk a little bit about why I’m (still) so excited about building machine learning tools.

I remember the magic I felt training my first machine learning algorithm. It was 2002 and I was taking Stanford’s 221 class from Daphne Koller. I had procrastinated so I spent 72 hours straight in the computer lab building a reinforcement learning algorithm that played game after game of Othello against itself. The algorithm started off incredibly dumb, but I kept fiddling and watching the computer struggle to play on my little ASCII terminal. In the middle of the night, something clicked and it started getting better and better, blowing past my own skill level. It felt like breathing life into a machine. I was hooked.

When I worked as a TA in Daphne’s lab a few years later during grad school, it seemed like nothing in ML was working. The now famous NIPS conference had just a few hundred attendees. I remember Mike Montemerlo and Sebastian Thrun had to work to get skeptical grad students excited about a self-driving car project. Out in the world, AI was mostly being used to rank ads.

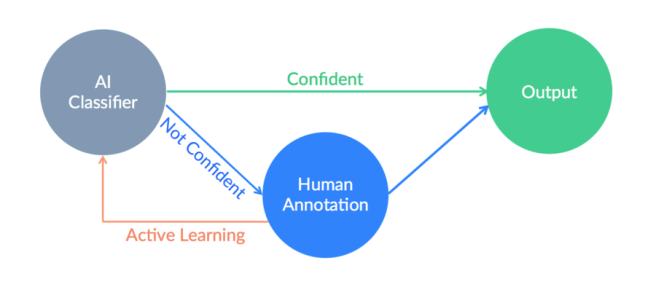

After working on search for a few years, by 2007 it was clear to me that the biggest problem in machine learning in every company and lab was access to training data. I left my job to start CrowdFlower (now Figure Eight) to solve that problem. Every researcher knew that access to training data was a major problem, but outside of research it wasn’t yet clear at all. We made tens of millions of dollars creating training data sets for everything from eBay’s product recommendations to Instagram’s support ticket classification, but until around 2016, nearly all VCs were adamant that machine learning wasn’t a legitimate vertical worth targeting.

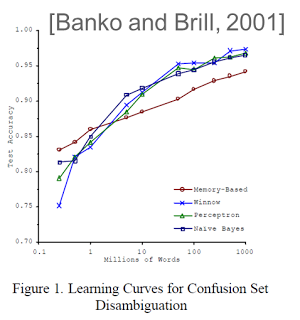

Ten years later, the company is thriving and has spawned a field full of competitors. But it turned out that one of our core doctrines was wrong. My strong bias was always that algorithms don’t matter. Over and over I had worked with people who promised a magic new breakthrough algorithm that would fundamentally change the way AI worked. It was never true. It was painful watching companies pour resources into improving algorithms when simply collecting more training data would have had a much bigger impact.

The first sign something had changed came in 2012, when I heard from Anthony Goldbloom that neural nets — the darling of 70s-era AI professors — were winning Kaggle competitions. In 2013 and 2014 we started seeing an explosion of image labeling tasks at CrowdFlower. It became undeniable that these “new” algorithms that people were calling deep learning were working in practical ways on applications where ML had never worked before.

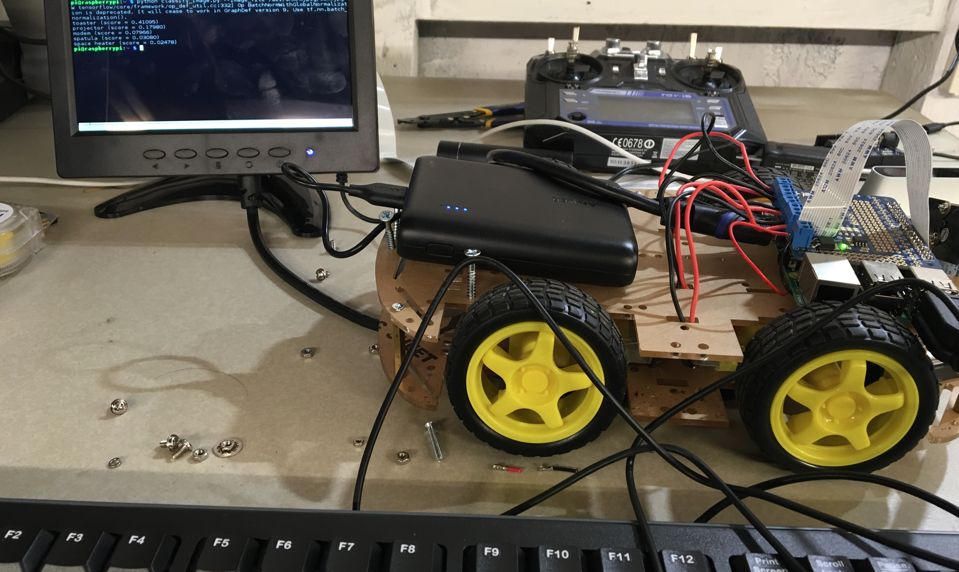

I stepped down as CEO of Figure Eight and went about building my technical chops in deep learning. I spent days in my garage, building robots running TensorFlow on a Raspberry Pi. My friend Adrien Treuille and I locked ourselves in an Airbnb and implemented backpropagation for perceptrons, then convolutional neural nets, and then more complicated models. To sharpen my thinking, I taught “introduction to deep learning” classes to thousands of engineers. I somehow got myself an internship at OpenAI and got to work with some of the best people in the world. I pair programmed with twenty-four-year-old grad students who intimidated the hell out of me.

Stepping back into being a practitioner gave me a view on a new set of problems. When you write (non-AI/ML) code directly, you can walk through what it does. You can diff it and version it in a meaningful way. Debugging is never easy, but we have seventy years of debugging expertise behind us and we’ve built an amazing array of tools and best practices to do it well.

With machine learning, we’re starting over. Instead of programming the computer directly, we write code that guides the computer to create a model. We can’t modify the model directly or even easily understand how it does what it does. Diffs between versions of the model don’t make sense to humans: if I change the functionality even slightly, every single bit in the model will likely be different. From my experience at Figure Eight, I knew all the machine learning teams were having the same problem. All of the problems machine learning always had are becoming worse with deep learning. Training data is still critically important, but because of this poor tooling, many teams that should be deploying a new model every day are lucky if they deploy twice a month.
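To make the diff problem concrete, here is a toy sketch, not anything from Weights and Biases itself. It assumes a made-up three-weight linear model trained by plain gradient descent on synthetic data; the function names (`train`, `differing_weights`), the "true" weights and the learning rates are all hypothetical choices for illustration. It trains the same model twice with one slightly different hyperparameter and counts how many stored weights come out byte-for-byte different.

```python
import random
import struct


def train(learning_rate, steps=200, seed=0):
    """Fit a 3-weight linear model with plain batch gradient descent."""
    rng = random.Random(seed)  # same seed => same synthetic data on every call
    true_w = [1.5, -2.0, 0.5]  # hypothetical "ground truth" weights
    data = []
    for _ in range(50):
        x = [rng.uniform(-1.0, 1.0) for _ in true_w]
        y = sum(w * xi for w, xi in zip(true_w, x)) + rng.gauss(0.0, 0.1)
        data.append((x, y))

    w = [0.0] * len(true_w)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to each weight.
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(data)
        w = [wi - learning_rate * g for wi, g in zip(w, grad)]
    return w


def differing_weights(a, b):
    """Count weights whose 64-bit float encodings are not byte-identical."""
    return sum(
        1 for x, y in zip(a, b) if struct.pack(">d", x) != struct.pack(">d", y)
    )


if __name__ == "__main__":
    w_a = train(learning_rate=0.10)
    w_b = train(learning_rate=0.11)  # one small hyperparameter tweak
    print("weights that differ bitwise:", differing_weights(w_a, w_b), "of", len(w_a))
    print("largest numeric gap:", max(abs(x - y) for x, y in zip(w_a, w_b)))
```

The two models behave almost identically, yet a byte-level diff of their weights flags every one of them as changed and says nothing about what actually changed. With millions of weights instead of three, the problem only gets worse.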

I started Weights and Biases because, for the second time in my career, I have deep conviction about what the AI field needs. Ten years ago, training data was the biggest problem holding back real-world machine learning. Today, the biggest pain is a lack of basic software and best practices to manage a completely new style of coding. Andrej Karpathy describes machine learning as the new kind of programming that needs a reinvented IDE. Pete Warden writes about AI’s reproducibility crisis: there’s no version control for machine learning models, and it’s incredibly hard to reproduce one’s own work, let alone someone else’s. As machine learning rapidly evolves from research projects to critical real-world deployed software, we suddenly have an acute need for a new set of developer tools.

Working on deep learning, I had that same sense of wonder, that I was breathing life into a machine, that had first hooked me on machine learning. Machine learning has the potential to solve the world’s biggest problems. In just the past couple of years, image recognition went from unsolvable to solved, and voice recognition became a household appliance. As Pete Warden said, software is eating the world and deep learning is eating software.

I love working with people working on machine learning. In my view, the work they do has the highest potential to impact the world, and I want to build them tools to help them do that. Like every powerful technology, machine learning will create lots of problems to wrestle with. Every machine learning practitioner I know wants their models to be safe, fair and reliable. Today, that’s really hard to do.

You can’t paint well with a crappy paintbrush, you can’t write code well in a crappy IDE, and you can’t build and deploy great deep learning models with the tools we have now. I can’t think of any more important goal than changing that.

Check out Weights & Biases at wandb.com.

Thanks to Noga Leviner, Michael E. Driscoll, Yanda Erlich, Will Smith and James Cham for feedback on early drafts.
